Style transfer is a deep learning method for image generation. This article gives an overview of the technique and a ready-to-use implementation of this type of model. It also highlights how style transfer can be used as a data augmentation technique.

What is style transfer?

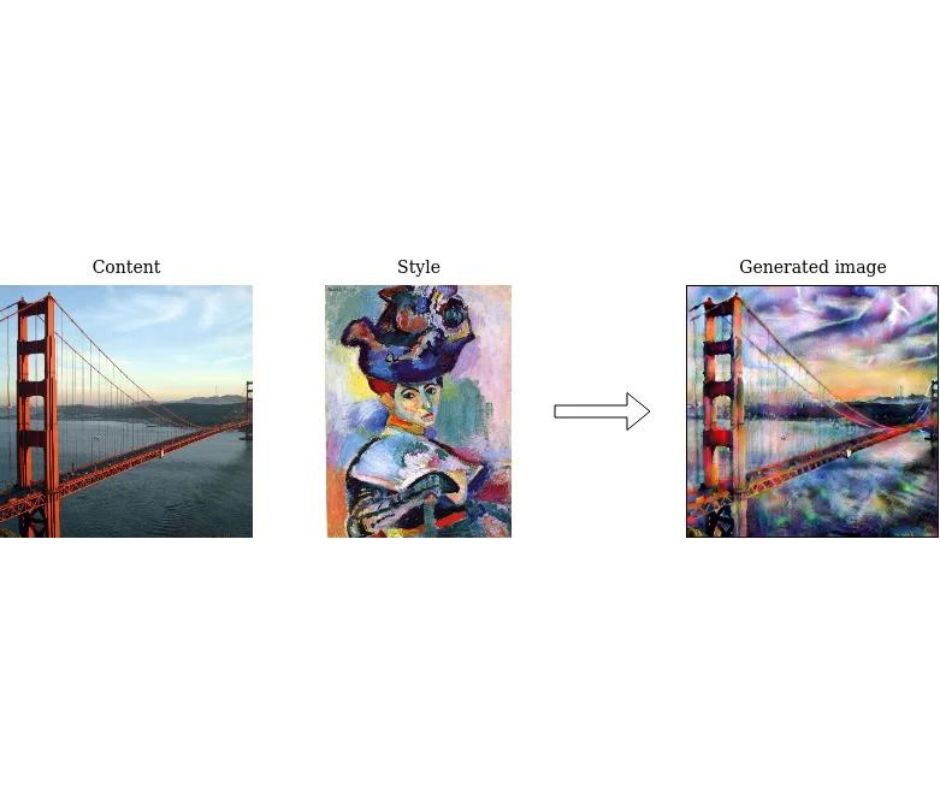

Style transfer, as its name suggests, is a deep learning method that transfers the style of one image to another. It is an image-generation technique that focuses on domain shift: the new image has the style of the first image and the content of the second. The visual style of an image refers to its texture, colors, or painting technique. In the example above, the style comes from Matisse's well-known Woman with a Hat. The content, by contrast, relates to the shapes of the objects or people in the scene. Here, the generated image has the bridge as its content and the style of Woman with a Hat. Concretely, you can be the first to discover today that Matisse painted the famous San Francisco bridge, several decades after his death! The generated image is nevertheless distinct from both input images.

The first breakthrough implementation of style transfer came in 2015 [2]. It focused on fine art and on transferring a painting style to a photo, and it was based on the VGG convolutional neural network. Style transfer remains an active research topic, and this category of models is starting to find applications in industry.

The most straightforward application is image and photo editing. In fact, some very popular online image editing tools (e.g. Phosus) are based on style transfer. From a broader perspective, style transfer is becoming a new tool for art generation. However, it can be relevant in any application that relies on deep learning. Here is how.

Style transfer as data augmentation

Deep learning models need a large amount of data to perform well, and their performance is closely linked to the quantity and quality of the dataset. Data science pipelines almost always include pre-processing and transformation steps. Data augmentation is a widely used strategy to increase the amount of data and, more importantly, its representativeness, which helps the model generalize. Basic data augmentation techniques include rotation, translation, cropping, flipping, scaling, deformation, and colorization. More advanced methods rely on image generation with GANs and style transfer models.
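As a rough illustration of the basic techniques listed above, here is a minimal sketch that uses NumPy only. The function name `basic_augmentations`, the 80% crop ratio, and the random stand-in image are our own assumptions for the example; in a real pipeline you would load actual images and probably rely on a dedicated augmentation library.

```python
import numpy as np


def basic_augmentations(image: np.ndarray, rng: np.random.Generator) -> dict:
    """Return simple augmented copies of an H x W x C image array."""
    height, width = image.shape[:2]

    # Flipping: mirror the image left to right by reversing the width axis.
    flipped = image[:, ::-1]

    # Rotation: turn the image by 90 degrees in the height/width plane.
    rotated = np.rot90(image, k=1, axes=(0, 1))

    # Cropping: keep a random window covering 80% of each side (assumed ratio).
    crop_h, crop_w = int(height * 0.8), int(width * 0.8)
    top = int(rng.integers(0, height - crop_h + 1))
    left = int(rng.integers(0, width - crop_w + 1))
    cropped = image[top:top + crop_h, left:left + crop_w]

    return {"flip": flipped, "rotate": rotated, "crop": cropped}


# Stand-in for a real photo: random pixels, 64 rows by 48 columns, RGB.
rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(64, 48, 3), dtype=np.uint8)
augmented = basic_augmentations(image, rng)
for name, array in augmented.items():
    print(name, array.shape)
```

Each returned array is a new training sample derived from the same source image. Images produced by a style transfer model can be added to the same pool.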

Style transfer: A brief technical overview

Style transfer models are based on deep learning architectures such as the well-known Generative Adversarial Networks (GANs) and efficient encoder-decoder networks. We will now look at a central method that is widely used and is the root of many other models: the Whitening and Coloring Transform (WCT) [1]. This key work relies on a simple idea, which we describe here.

a. The first step is to train a decoder network to reconstruct images from the output of a feature extractor model. In this pipeline, we go from an input image to an embedding of the image, and back to the input image. This simple step is the core of every encoder-decoder network. The authors pre-train five such models, each at a different depth of the feature extractor.

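b. A WCT module is then added to this reconstruction pipeline to perform the style transfer. It whitens the content features to remove their own style statistics, then colors them with the statistics of the style features. This is the core of the strategy: it takes two images as input and outputs one image with the content of the first and the style of the second.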

c. The final model combines five such encoder-WCT-decoder blocks, applied sequentially.
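To make step b more concrete, here is a minimal NumPy sketch of the whitening and coloring operations on feature matrices of shape (channels, positions). The random arrays are stand-ins for encoder features, and the helper names, the `eps` floor on eigenvalues, and the `alpha` blending weight are assumptions made for this illustration. It is not the reference implementation, and it leaves out the encoder and decoder entirely.

```python
import numpy as np


def center_and_cov_power(features: np.ndarray, power: float, eps: float = 1e-5):
    """Center a (C, N) feature matrix and return (mean, centered, cov**power)."""
    mean = features.mean(axis=1, keepdims=True)
    centered = features - mean
    cov = centered @ centered.T / (features.shape[1] - 1)
    # Symmetric eigendecomposition: cov = E diag(d) E^T.
    eigvals, eigvecs = np.linalg.eigh(cov)
    # Floor tiny eigenvalues so the inverse square root stays finite.
    eigvals = np.clip(eigvals, eps, None)
    cov_power = (eigvecs * eigvals**power) @ eigvecs.T
    return mean, centered, cov_power


def wct(content_feat: np.ndarray, style_feat: np.ndarray, alpha: float = 1.0):
    """Whiten the content features, then color them with the style statistics."""
    _, content_centered, whitening = center_and_cov_power(content_feat, -0.5)
    style_mean, _, coloring = center_and_cov_power(style_feat, 0.5)
    # Whitening: the result has (approximately) identity covariance.
    whitened = whitening @ content_centered
    # Coloring: the result takes on the style covariance and the style mean.
    colored = coloring @ whitened + style_mean
    # alpha = 1 gives full stylization, alpha = 0 returns the content features.
    return alpha * colored + (1.0 - alpha) * content_feat


# Random stand-ins for encoder features: 8 channels, flattened spatial positions.
rng = np.random.default_rng(0)
content_feat = rng.normal(size=(8, 500))
style_feat = 3.0 * rng.normal(size=(8, 400)) + 1.0
stylized = wct(content_feat, style_feat)
print(np.allclose(np.cov(stylized), np.cov(style_feat)))
```

In the full model, the transformed features would then go through the pre-trained decoder to produce an image. The sketch only checks that the output features carry the channel statistics of the style features.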

From this, we understand that we only need one content image and one style sample to get a new image that combines both! However, some models need a semantic map as a third input. They use it to know which parts of the content image to keep as they are and which parts to restyle.

Going beyond images

So far, we have only talked about transferring the style of a painting to an image. However, plenty of style transfer models have been trained for other data types. One of them may be interesting for your next project! Still focusing on images, PhotoWCT, which is derived from the WCT method described above, takes two photos as input and produces a photorealistic output. The core idea is to add constraints to the pipeline, which is why such models are called strongly constrained.

Other models specialize in specific data, such as portraits or changes in lighting exposure. Some works focus on videos and enforce consistency between frames. Finally, you can transfer style between texts [4] or pieces of music [5].

How to get started with the code?

The easiest way to get started is to begin with an existing implementation. We give here a Python example based on the FastPhotoStyle GitHub repository. This implementation builds on the WCT model presented above.

1. The required packages are PyTorch, PIL, pathlib, and typing. PyTorch and PIL are common deep learning and imaging packages, while pathlib and typing are part of the Python standard library. We import them.

2. We download 4 files from the GitHub repository. The PhotoWCT model is the network used to transfer style. It is the core of the implementation and works according to what we explained earlier for WCT. The Propagator class suppresses distortion in the stylized image by applying an image smoothing filter. We also download some pre-trained weights. Finally, we download the process_stylisation implementation to apply the desired style to the new image. We then import these files (the weights are loaded into the PhotoWCT model).

3. We define a load_model_wct method that loads the downloaded PhotoWCT weights and takes your CUDA configuration into account.

4. We define a style transfer method that generates a new image from two input images and a PhotoWCT model.

5. Finally, we can generate a new image from two input images.
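The five steps above can be sketched as follows. This is a hedged outline, not the original code of this article. It assumes that `photo_wct.py`, `photo_smooth.py`, and `process_stylization.py` from the FastPhotoStyle repository sit next to your script, and that the keyword arguments of `stylization` match the repository's demo script as we recall it, so please check them against your copy. The `output_path_for` helper, the `style_transfer` signature, and all file paths are hypothetical. Repository modules and PyTorch are imported inside the functions so that the file can be loaded before everything is installed.

```python
from pathlib import Path
from typing import Optional, Tuple


def output_path_for(content: Path, style: Path, out_dir: Path) -> Path:
    """Hypothetical helper: name the generated image after both inputs."""
    return out_dir / f"{content.stem}_as_{style.stem}.png"


def load_model_wct(weights_path: Path, use_cuda: Optional[bool] = None) -> Tuple[object, bool]:
    """Build a PhotoWCT network and load the downloaded weights (sketch)."""
    import torch
    from photo_wct import PhotoWCT  # assumed: file copied from the repository

    if use_cuda is None:
        use_cuda = torch.cuda.is_available()
    model = PhotoWCT()
    # map_location lets GPU-saved weights load on a CPU-only machine.
    state = torch.load(str(weights_path), map_location="cuda" if use_cuda else "cpu")
    model.load_state_dict(state)
    if use_cuda:
        model.cuda(0)
    return model, use_cuda


def style_transfer(model, content: Path, style: Path, output: Path, use_cuda: bool) -> Path:
    """Stylize `content` with the style of `style` and save it to `output` (sketch)."""
    import process_stylization           # assumed module name, as in the repository
    from photo_smooth import Propagator  # smoothing step that reduces distortions

    # Keyword names are assumed from the repository's demo script.
    process_stylization.stylization(
        stylization_module=model,
        smoothing_module=Propagator(),
        content_image_path=str(content),
        style_image_path=str(style),
        content_seg_path=[],   # no semantic map for the content image
        style_seg_path=[],     # no semantic map for the style image
        output_image_path=str(output),
        cuda=use_cuda,
        save_intermediate=False,
        no_post=False,
    )
    return output


# Example usage (hypothetical paths), to run once the repository files are in place:
# model, use_cuda = load_model_wct(Path("PhotoWCTModels/photo_wct.pth"))
# content, style = Path("images/bridge.png"), Path("images/style.png")
# result = style_transfer(model, content, style,
#                         output_path_for(content, style, Path("results")), use_cuda)
```

Deriving the output name from both inputs is a small design choice that helps when the same content image is stylized with many style images, which is the typical data augmentation scenario.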

We have seen how to generate images with the PhotoWCT style transfer method. You also know that this image-generation strategy can be useful as a data augmentation step. All that remains is to get started! The references below are a good place to go further.

References

[1] Li, Yijun, et al. "Universal style transfer via feature transforms." Advances in neural information processing systems 30 (2017).

[2] Gatys, Leon A., Alexander S. Ecker, and Matthias Bethge. "A neural algorithm of artistic style." arXiv preprint arXiv:1508.06576 (2015).

[3] Tumanyan, Narek, et al. "Splicing ViT Features for Semantic Appearance Transfer." Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2022.

[4] Kashyap, Abhinav Ramesh, et al. "So Different Yet So Alike! Constrained Unsupervised Text Style Transfer." arXiv preprint arXiv:2205.04093 (2022).

[5] Koo, Junghyun, et al. "Music Mixing Style Transfer: A Contrastive Learning Approach to Disentangle Audio Effects." arXiv preprint arXiv:2211.02247 (2022).
